Adaptive Prototype Learning and Allocation for Few-Shot Segmentation

CVPR 2021

Gen Li¹,³, Varun Jampani², Laura Sevilla-Lara¹, Deqing Sun², Jonghyun Kim³, Joongkyu Kim³

¹University of Edinburgh, ²Google Research, ³Sungkyunkwan University

Abstract

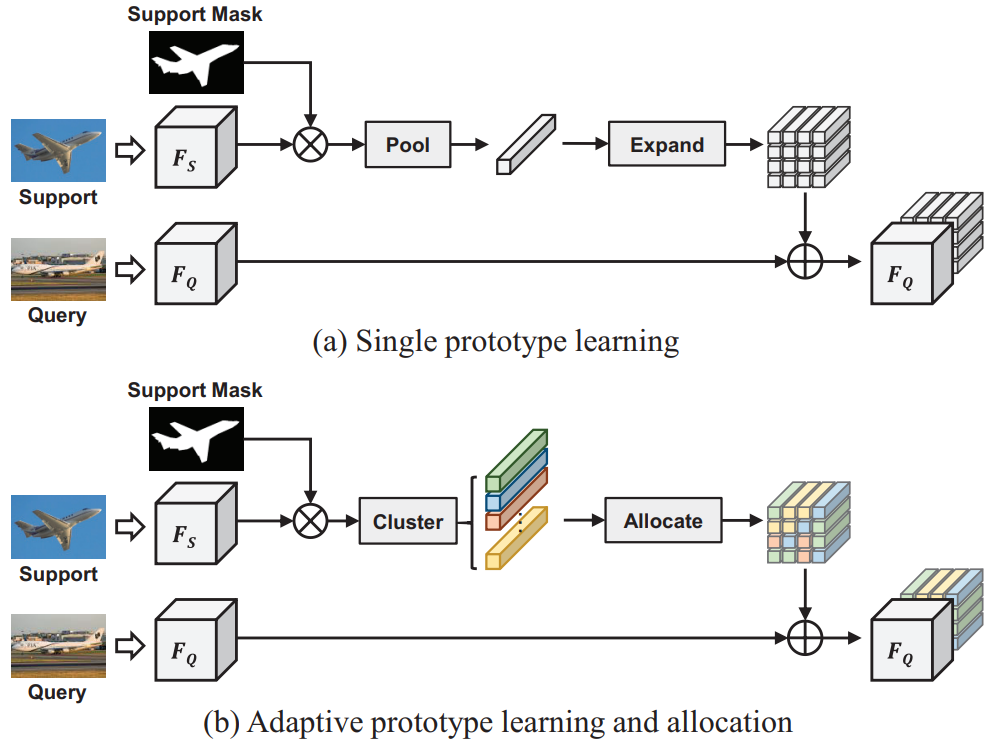

Prototype learning is extensively used for few-shot segmentation. Typically, a single prototype is obtained from the support feature by averaging the global object information. However, using one prototype to represent all the information may lead to ambiguities. In this paper, we propose two novel modules, named superpixel-guided clustering (SGC) and guided prototype allocation (GPA), for multiple prototype extraction and allocation. Specifically, SGC is a parameter-free and training-free approach that extracts more representative prototypes by aggregating similar feature vectors, while GPA selects matched prototypes to provide more accurate guidance. By integrating SGC and GPA, we propose the Adaptive Superpixel-guided Network (ASGNet), a lightweight model that adapts to object scale and shape variation. In addition, our network easily generalizes to k-shot segmentation with substantial improvement and no additional computational cost. In particular, our evaluations on COCO demonstrate that ASGNet surpasses the state-of-the-art method by 5% in 5-shot segmentation.
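To make the contrast between one prototype and several concrete, here is a minimal toy sketch in plain Python. It is not the ASGNet implementation: the function names (`single_prototype`, `cluster_prototypes`, `allocate`) are hypothetical, a plain k-means-style loop on cosine similarity stands in for superpixel-guided clustering, and a nearest-prototype lookup stands in for guided prototype allocation. Features are short lists of floats rather than feature maps from a backbone network.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-8)

def mean(vectors):
    """Element-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def single_prototype(features, mask):
    """Baseline: average all foreground features into one prototype."""
    return mean([f for f, m in zip(features, mask) if m])

def cluster_prototypes(features, mask, num_prototypes, iters=10):
    """Assumed stand-in for SGC: k-means-style grouping of foreground features."""
    fg = [f for f, m in zip(features, mask) if m]
    # Initialise centres with evenly spaced foreground features.
    centers = [fg[i * len(fg) // num_prototypes] for i in range(num_prototypes)]
    for _ in range(iters):
        groups = [[] for _ in centers]
        for f in fg:
            best = max(range(len(centers)), key=lambda k: cosine(f, centers[k]))
            groups[best].append(f)
        # Keep the old centre if a group ends up empty.
        centers = [mean(g) if g else c for g, c in zip(groups, centers)]
    return centers

def allocate(query_features, prototypes):
    """Assumed stand-in for GPA: pick the most similar prototype per query location."""
    return [
        max(range(len(prototypes)), key=lambda k: cosine(q, prototypes[k]))
        for q in query_features
    ]

# Toy support set: the object has two visually distinct parts plus one background location.
support = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.1, 0.9], [-1.0, -1.0]]
support_mask = [1, 1, 1, 1, 0]
query = [[1.0, 0.0], [0.0, 1.0]]

global_proto = single_prototype(support, support_mask)
prototypes = cluster_prototypes(support, support_mask, num_prototypes=2)
assignment = allocate(query, prototypes)

for q, k in zip(query, assignment):
    print(q, "single:", round(cosine(q, global_proto), 3),
          "allocated:", round(cosine(q, prototypes[k]), 3))
```

In this toy case the averaged prototype sits between the two object parts, so each query location matches its allocated cluster prototype more closely than the global average. This is the kind of ambiguity the abstract attributes to a single prototype.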

Video Summary

Qualitative Results

Citation

@inproceedings{li:asgnet:2021,
  title={Adaptive prototype learning and allocation for few-shot segmentation},
  author={Li, Gen and Jampani, Varun and Sevilla-Lara, Laura and Sun, Deqing and Kim, Jonghyun and Kim, Joongkyu},
  booktitle={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},
  year={2021}
}