One-Shot Open Affordance Learning with Foundation Models

CVPR 2024

Gen Li¹, Deqing Sun², Laura Sevilla-Lara¹, Varun Jampani³

¹University of Edinburgh, ²Google Research, ³Stability AI

Abstract

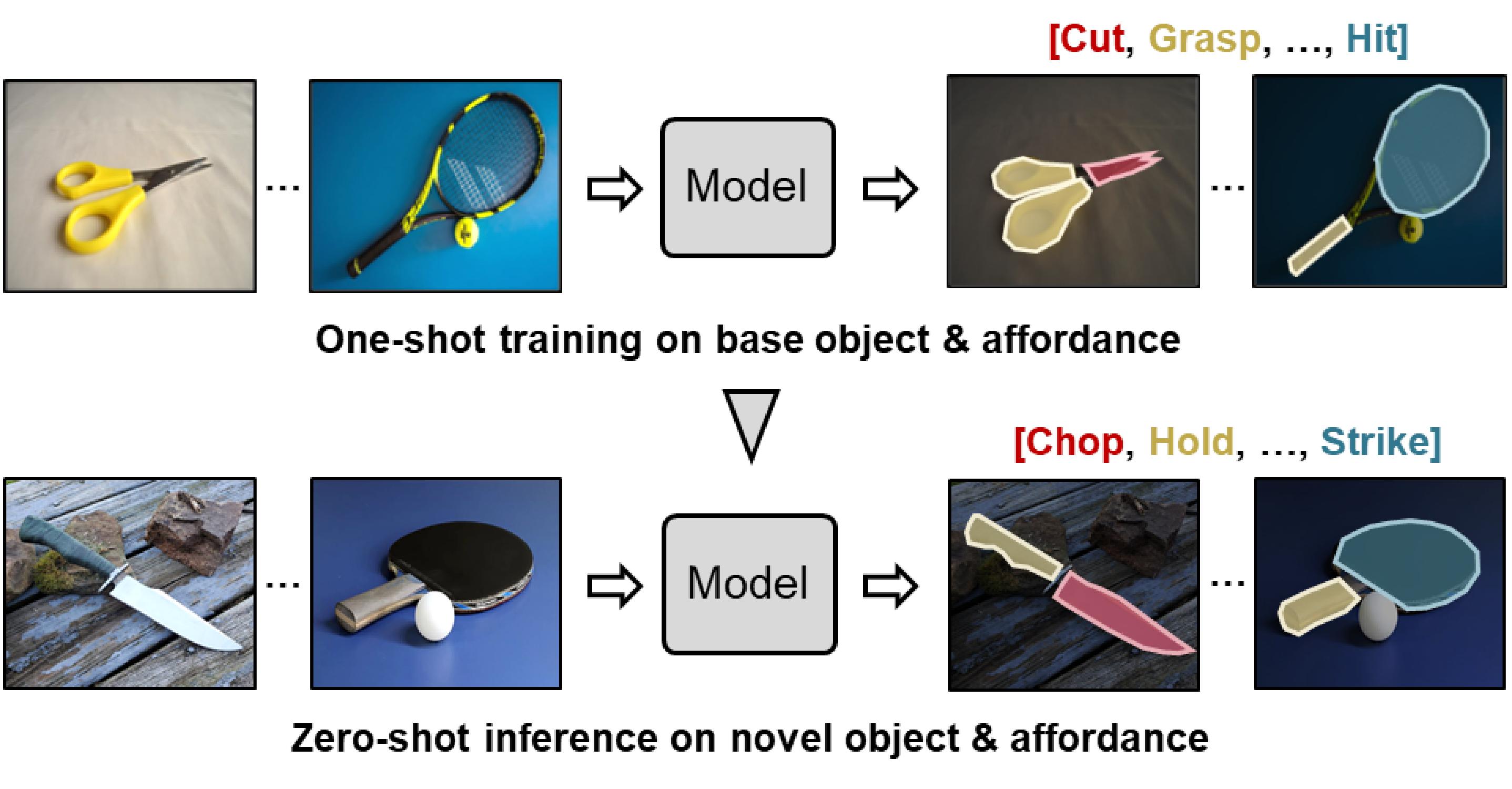

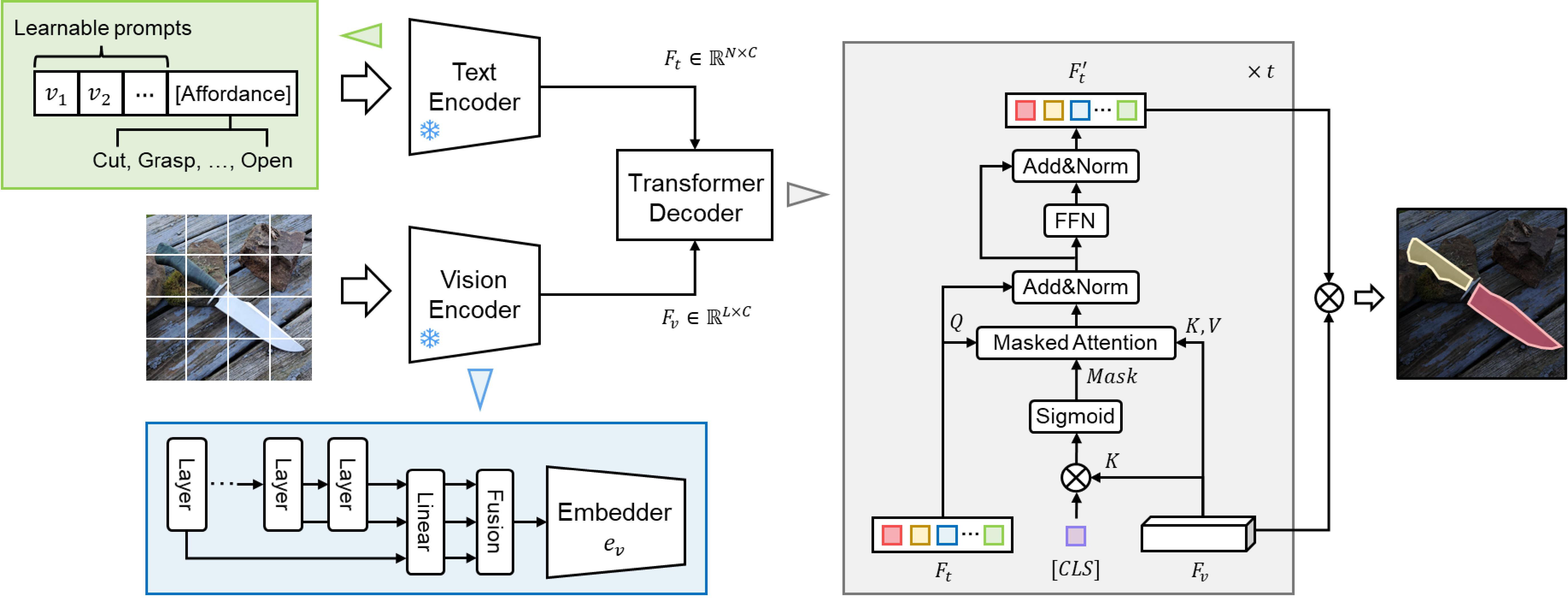

We introduce One-shot Open Affordance Learning (OOAL), where a model is trained with just one example per base object category but is expected to identify novel objects and affordances. While vision-language models excel at recognizing novel objects and scenes, they often struggle to understand finer levels of granularity such as affordances. To address this issue, we conduct a comprehensive analysis of existing foundation models to explore their inherent understanding of affordances and to assess their potential for data-limited affordance learning. We then propose a vision-language framework with simple and effective designs that boost the alignment between visual features and affordance text embeddings. Experiments on two affordance segmentation benchmarks show that the proposed method outperforms state-of-the-art models while using less than 1% of the full training data, and exhibits reasonable generalization capability on unseen objects and affordances.
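To make the notion of aligning visual features with affordance text embeddings concrete, the following is a minimal sketch and not the paper's implementation. It assumes per-patch visual features and one text embedding per affordance label are already available from some pretrained encoders, and it labels each patch with the affordance whose embedding has the highest cosine similarity. The function name `affordance_map`, the array shapes, and the random stand-in features are hypothetical and chosen only for illustration.

```python
import numpy as np


def affordance_map(patch_feats, text_embeds, grid_hw, eps=1e-8):
    """Assign each image patch the affordance with the most similar text embedding.

    patch_feats: (N, D) array of per-patch visual features (assumed given).
    text_embeds: (K, D) array, one embedding per affordance label (assumed given).
    grid_hw:     (H, W) patch grid with H * W == N.
    Returns (labels, sim): labels is (H, W) ints, sim is (K, H, W) cosine similarities.
    """
    # L2-normalise both sets so the dot product equals cosine similarity.
    v = patch_feats / np.maximum(np.linalg.norm(patch_feats, axis=1, keepdims=True), eps)
    t = text_embeds / np.maximum(np.linalg.norm(text_embeds, axis=1, keepdims=True), eps)

    sim = t @ v.T                       # (K, N) similarity of every label to every patch
    h, w = grid_hw
    sim = sim.reshape(len(text_embeds), h, w)
    return sim.argmax(axis=0), sim      # per-patch label index and the full score maps


# Stand-in data: random features in place of real encoder outputs.
rng = np.random.default_rng(0)
patches = rng.normal(size=(16 * 16, 64))   # 16x16 patch grid, 64-dim features
texts = rng.normal(size=(3, 64))           # e.g. three affordance labels
labels, scores = affordance_map(patches, texts, (16, 16))
print(labels.shape, scores.shape)
```

In this simplified view, recognizing an unseen affordance only requires adding a new row to `text_embeds`, which is one reason text-aligned features are attractive in an open-vocabulary setting.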

Framework

Video Summary

Citation

@inproceedings{li:ooal:2024,
  title = {One-Shot Open Affordance Learning with Foundation Models},
  author = {Li, Gen and Sun, Deqing and Sevilla-Lara, Laura and Jampani, Varun},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year = {2024}
}